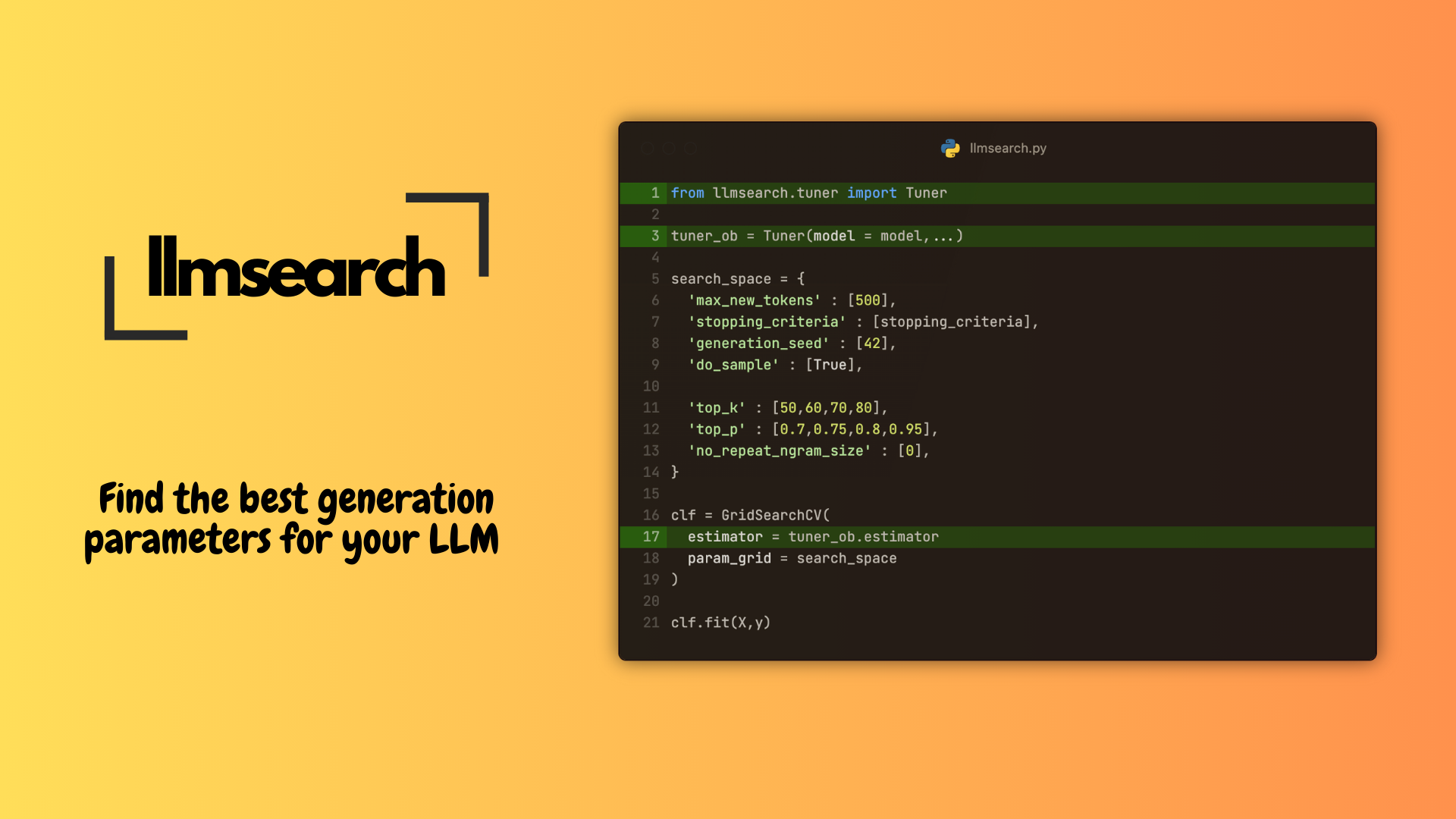

Package llmsearch

Conduct hyperparameter search over the generation parameters of large language models (LLMs). This tool is designed for ML practitioners who want to optimize their sampling strategies to improve model performance. Provide a model, a dataset, and a performance metric, and llmsearch handles the rest.
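The core idea can be illustrated without a real model. The following is a minimal, self-contained sketch of an exhaustive search over generation parameters, assuming a hypothetical `fake_generate` function in place of an actual LLM call and exact match as the performance metric; it shows the technique, not llmsearch's implementation.

```python
from itertools import product
from statistics import mean


# Hypothetical stand-in for an LLM: the output depends on the sampling
# parameters so that the search has something to optimize.
def fake_generate(prompt: str, temperature: float, top_k: int) -> str:
    # Pretend that low temperature and a small top_k yield the "correct" answer.
    if temperature <= 0.3 and top_k <= 10:
        return prompt.upper()
    return prompt


def exact_match(prediction: str, reference: str) -> float:
    return 1.0 if prediction.strip() == reference.strip() else 0.0


def grid_search(dataset, param_grid, generate, metric):
    """Evaluate every combination in param_grid; return (best_params, best_score)."""
    names = list(param_grid)
    best_params, best_score = None, float("-inf")
    for values in product(*(param_grid[name] for name in names)):
        params = dict(zip(names, values))
        # Average the metric over the whole dataset for this combination.
        score = mean(metric(generate(x, **params), y) for x, y in dataset)
        if score > best_score:  # strict '>' keeps the first best combination found
            best_params, best_score = params, score
    return best_params, best_score


dataset = [("hello", "HELLO"), ("world", "WORLD")]
param_grid = {"temperature": [0.1, 0.7, 1.0], "top_k": [5, 50]}
best_params, best_score = grid_search(dataset, param_grid, fake_generate, exact_match)
print(best_params, best_score)  # → {'temperature': 0.1, 'top_k': 5} 1.0
```

An exhaustive grid grows multiplicatively: three temperatures and two `top_k` values already mean six full passes over the dataset, which is why the grid is usually kept small for LLM inference.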

QuickStart

- llama-3-8b Example - A quickstart notebook that shows the basic functionality of llmsearch and how to quickly set up and run hyperparameter searches.

End-to-End Model Examples

- GSM8K Example - Shows a `GridSearchCV` run on the GSM8K dataset using the `TheBloke/CapybaraHermes-2.5-Mistral-7B-AWQ` model.
- Samsum Example - Shows a `GridSearchCV` run on the samsum dataset using a version of `cognitivecomputations/dolphin-2.2.1-mistral-7b` fine-tuned on the same dataset.
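A search like the GSM8K run needs a metric that turns free-form generations into a comparable number. The sketch below shows one such scorer, relying on the fact that GSM8K reference answers end with `#### <number>` and assuming the model's final answer is the last number it writes; the function names are hypothetical and this is not necessarily the scorer used in the notebook.

```python
import re
from typing import Optional, Sequence

# Integers or decimals, with an optional minus sign and thousands separators.
NUMBER_RE = re.compile(r"-?\d[\d,]*(?:\.\d+)?")


def extract_reference(answer: str) -> str:
    """GSM8K reference answers end with '#### <number>'; return that number."""
    return answer.split("####")[-1].strip().replace(",", "")


def extract_prediction(generation: str) -> Optional[str]:
    """Assume the model's final answer is the last number in its output."""
    matches = NUMBER_RE.findall(generation)
    return matches[-1].replace(",", "") if matches else None


def gsm8k_accuracy(generations: Sequence[str], answers: Sequence[str]) -> float:
    """Fraction of generations whose last number equals the reference answer."""
    correct = 0
    for generation, answer in zip(generations, answers):
        prediction = extract_prediction(generation)
        # Compare numerically so that "18" and "18.0" count as the same answer.
        if prediction is not None and float(prediction) == float(extract_reference(answer)):
            correct += 1
    return correct / len(answers)


generations = ["3 plus 4 is 7.", "The answer is 10."]
answers = ["3 + 4 = <<3+4=7>>7\n#### 7", "#### 12"]
print(gsm8k_accuracy(generations, answers))  # → 0.5
```

The last-number heuristic is deliberately crude; it rewards a prompt template that makes the model end its response with the final answer.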

Refer to the README for more details on how to use llmsearch.

API Reference Documentation

Sub-modules

llmsearch.patches

Module used to monkey-patch the `transformers` library.

llmsearch.scripts

Useful utility scripts, such as multi-token stopping criteria.

llmsearch.tuner

Core tuner class that adapts a PyTorch model to function as a scikit-learn estimator.

llmsearch.utils

Utilities that support the internal functioning of llmsearch.
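The `llmsearch.tuner` module is described as adapting a model to the scikit-learn estimator interface. The sketch below illustrates that contract in plain Python (constructor arguments stored as attributes, `get_params`/`set_params`, `fit`, `predict`, `score`), assuming a hypothetical `generate_fn` callable in place of a real model. `GenerationEstimator` and its parameter set are illustrative names, not llmsearch's actual tuner API.

```python
from typing import Callable, Optional, Sequence


class GenerationEstimator:
    """Hypothetical adapter exposing generation parameters as estimator hyperparameters."""

    def __init__(
        self,
        generate_fn: Optional[Callable[..., str]] = None,
        temperature: float = 1.0,
        top_p: float = 1.0,
        max_new_tokens: int = 64,
    ):
        # scikit-learn convention: store constructor arguments unchanged, under the same names.
        self.generate_fn = generate_fn
        self.temperature = temperature
        self.top_p = top_p
        self.max_new_tokens = max_new_tokens

    def get_params(self, deep: bool = True) -> dict:
        # `deep` is accepted for interface compatibility; there are no nested estimators.
        return {
            "generate_fn": self.generate_fn,
            "temperature": self.temperature,
            "top_p": self.top_p,
            "max_new_tokens": self.max_new_tokens,
        }

    def set_params(self, **params) -> "GenerationEstimator":
        valid = self.get_params()
        for name, value in params.items():
            if name not in valid:
                raise ValueError(f"Invalid parameter {name!r}")
            setattr(self, name, value)
        return self

    def fit(self, X, y=None) -> "GenerationEstimator":
        # Nothing is trained: the weights stay fixed and only sampling settings vary.
        return self

    def predict(self, X: Sequence[str]) -> list:
        gen_kwargs = {
            "temperature": self.temperature,
            "top_p": self.top_p,
            "max_new_tokens": self.max_new_tokens,
        }
        return [self.generate_fn(prompt, **gen_kwargs) for prompt in X]

    def score(self, X: Sequence[str], y: Sequence[str]) -> float:
        # Placeholder metric: fraction of exact matches.
        predictions = self.predict(X)
        return sum(p == t for p, t in zip(predictions, y)) / len(y)
```

For example, with a toy `generate_fn` that truncates the prompt to `max_new_tokens` characters, calling `set_params(max_new_tokens=10)` changes the score without any refitting. Keeping `fit` a no-op reflects that the searched quantities are inference-time settings, so each candidate is evaluated rather than trained.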
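The `llmsearch.scripts` module mentions multi-token stopping criteria. The motivation is that a stop string such as a blank line may tokenize to several token ids, so comparing only the last generated id against a single end-of-sequence id is not enough. Below is a minimal, framework-free sketch of the check, assuming the stop strings have already been tokenized into id sequences; `should_stop`, `batch_should_stop`, and the token ids are hypothetical, not the llmsearch API. In `transformers`, logic like this would typically be wrapped in a `StoppingCriteria` subclass.

```python
from typing import Sequence


def should_stop(generated_ids: Sequence[int], stop_sequences: Sequence[Sequence[int]]) -> bool:
    """Return True if generated_ids ends with any of the given token-id stop sequences."""
    for stop in stop_sequences:
        n = len(stop)
        # Skip empty stop sequences; compare only the trailing n generated ids.
        if n and len(generated_ids) >= n and list(generated_ids[-n:]) == list(stop):
            return True
    return False


def batch_should_stop(batch: Sequence[Sequence[int]], stop_sequences: Sequence[Sequence[int]]) -> list:
    """Per-sequence stop flags for a batch of generated id sequences."""
    return [should_stop(ids, stop_sequences) for ids in batch]


# Hypothetical ids: suppose "\n\n" tokenizes to [13, 13] and "###" to [835].
stops = [[13, 13], [835]]
print(batch_should_stop([[1, 50, 13, 13], [1, 13], [7, 835]], stops))  # → [True, False, True]
```

Matching on token ids is cheap but can miss a stop string that the tokenizer splits differently in context; decoding the tail of the sequence and matching on text is a more robust, if slower, alternative.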